Making sense of AI Mania

Why the gen AI explosion is happening now | Testing GPT-4V | What is MLOps?

Hello, this month I’ve been to roughly a million conferences so it’s safe to say I’m tired. But anyway, welcome to the new shiny Secret Handshake, now housed comfortably within the confines of Substack.

If you just got here, welcome. Handshake is a generative AI consultancy made up of a small team of professionals, and every month we like to share our insights and learnings in this free resource. Sign up if that sounds useful to you!

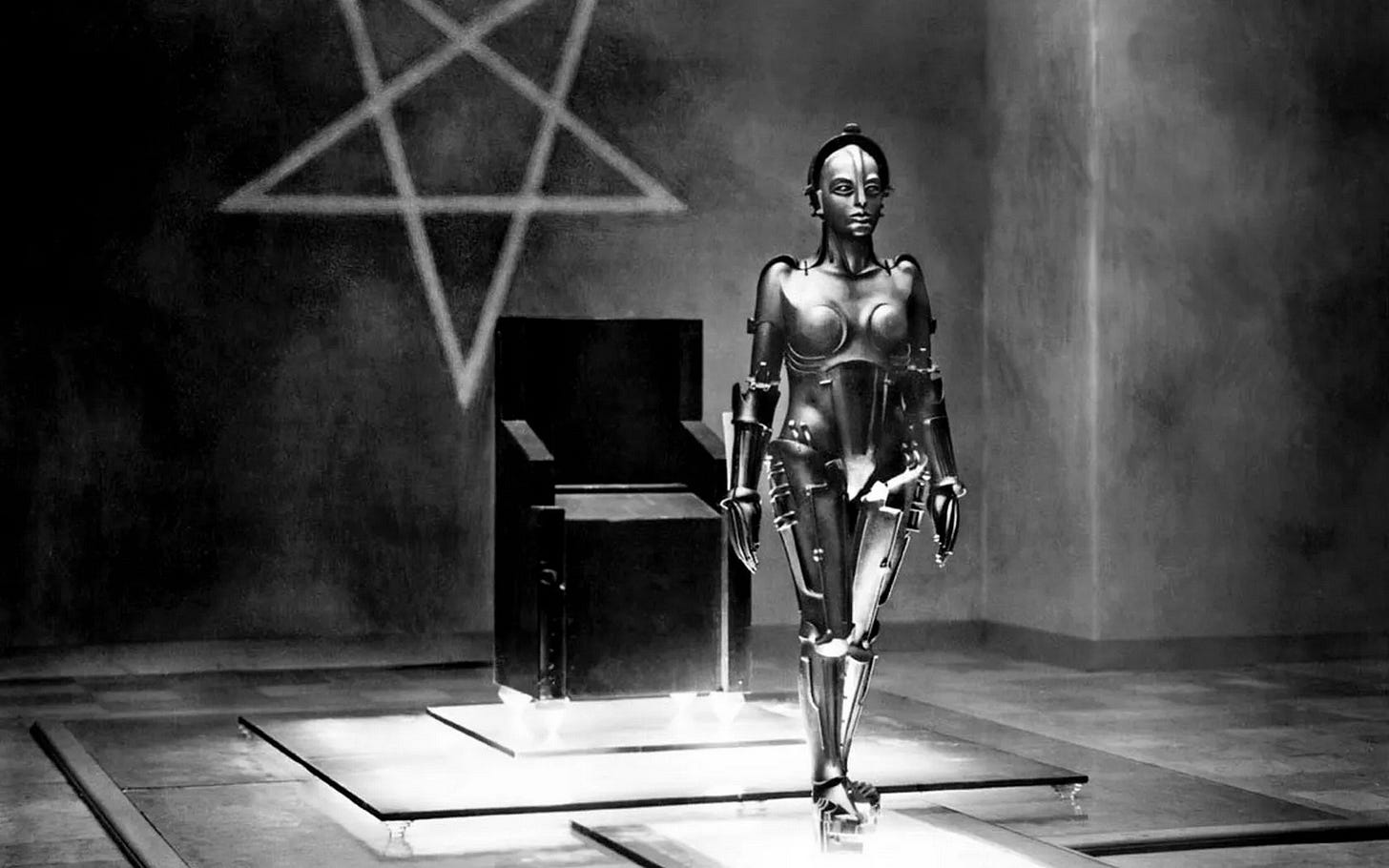

Why are there suddenly so many generative AI tools?

For me, a big part of understanding the recent explosion in AI’s capabilities has been looking back at how we got here. This is something that I always cover in my masterclass because I think that it’s useful knowledge to have when trying to navigate the landscape in the 2020s.

It’s important to understand that all this AI mania is not part of a coordinated effort to make generative AI tools really popular all of a sudden (although business interests obviously do play a part in this). What’s happening right now is actually more like a confluence of circumstances and ideas.

Let’s start with the ideas: the term ‘artificial intelligence’ was actually coined way back in 1956 during a research project at Dartmouth College. Ten prominent computer scientists essentially sat down together and spent a summer trying to figure out how machines might replicate all aspects of human learning, intelligence, and problem-solving. This event is considered instrumental in the conception of AI as a field. It built on earlier ideas like the Turing test (which Alan Turing had proposed in 1950), and one of its organisers, John McCarthy, went on to create one of the first AI programming languages (LISP), which is still used today.

Following this was a flurry of new research spanning the 1960s and 70s. There were fundamental mathematical breakthroughs, and the development of important concepts and methods such as natural language processing (NLP) and complex solution trees — stuff we see in AI models nowadays. The ideas were all there decades ago. The thing that WASN’T there was computing power.

So, roughly speaking (very roughly), computers were ‘too rubbish for AI’ throughout the 1980s and for a lot of the 90s. Progress was pretty slow during these years, a stretch often called an ‘AI winter’. I think that a lot of people look at IBM’s Deep Blue, which beat world chess champion Garry Kasparov in 1997, as a huge historical breakthrough. And it was, but we need to remember that Deep Blue operated within the narrow parameters of the rules of chess: a simple machine-readable environment incomparable to the complexities of the real world, which machines are just starting to interact with now.

Okay, now on to the circumstances: while researchers were suffering through this AI winter, computer games were getting better. This meant that more people were playing them, which incentivised the continuous improvement of graphics processing units (GPUs) within gaming systems. AI researchers began to realise that the kinds of calculations GPUs do to process high quality graphics (i.e. huge numbers of fairly simple ones, done in parallel and very rapidly) were exactly what was needed to power AI systems.
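To make that overlap a bit more concrete, here is a minimal sketch in plain Python. It is my own illustration, not code from any real graphics or AI library: the `matvec` helper, the weights, and the input values are all made up. The point is just that rotating a point on screen and running one layer of a tiny neural network both boil down to the same operation, multiplying a matrix by a vector.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Graphics: rotate the point (1, 0) by 90 degrees around the origin.
angle = math.pi / 2
rotation = [
    [math.cos(angle), -math.sin(angle)],
    [math.sin(angle), math.cos(angle)],
]
rotated_point = matvec(rotation, [1.0, 0.0])  # approximately (0, 1)

# AI: one layer of a toy neural network (weights invented for illustration).
weights = [
    [0.5, -1.0],
    [2.0, 0.25],
]
# ReLU activation: negative values are clipped to zero.
layer_output = [max(0.0, x) for x in matvec(weights, [1.0, 2.0])]
```

Each number in the output is an independent sum, so thousands of them can be worked out at the same time, which is the kind of parallel work GPUs were built to do.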

And that’s why now, in the 2020s, it feels as though we’re making huge leaps and bounds within generative AI over months or even just weeks. It’s not because we only just figured this stuff out; it’s because we’re finally able to capitalise on methods that were stuck being merely theoretical up until now.

I kind of find it fascinating that these ideas were just lying dormant for decades, waiting for computers to become good enough to execute them. Who knows what other ideas are still locked behind insufficient computing power? Should we be scared? Maybe. But probably not. Unless?

In case you don’t know what MLOps is yet, it’s this

So, it seems the MLOps space is getting bigger: a company called ZenML just raised a big round of funding. MLOps (machine learning operations) is the practice of building, deploying, and maintaining machine learning models reliably. What ZenML does is make it easy to build machine learning pipelines. Their open source framework allows engineers and data scientists to connect different tools together in order to develop and train their own small models that fit their own needs.

This isn’t anything monumental; I’m just pointing it out because it signifies (in business, anyway) a growing shift away from huge generic proprietary models (like the ones provided by OpenAI) and towards systems that are custom-built for very specific purposes. There are loads of services in this space now, e.g. MosaicML, Banana, and Baseten.
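If "pipeline" sounds abstract, here is a rough sketch of the idea in plain Python. To be clear, this is not ZenML’s API or anyone else’s: the step names, the toy dataset, and the `run_pipeline` helper are all hypothetical, invented for illustration. It just shows the basic shape, which is a chain of small steps where each one hands its output to the next.

```python
def load_data(_):
    """Step 1: return a tiny made-up dataset of (feature, label) pairs."""
    return [(1.0, 0), (2.0, 0), (3.0, 1), (4.0, 1)]

def train_model(data):
    """Step 2: 'train' a threshold halfway between the two class averages."""
    zeros = [x for x, y in data if y == 0]
    ones = [x for x, y in data if y == 1]
    threshold = (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2
    return {"threshold": threshold, "data": data}

def evaluate(artifacts):
    """Step 3: measure the threshold's accuracy on the same toy data."""
    threshold, data = artifacts["threshold"], artifacts["data"]
    correct = sum((x > threshold) == bool(y) for x, y in data)
    return {"threshold": threshold, "accuracy": correct / len(data)}

def run_pipeline(steps):
    """Run each step in order, feeding its output into the next one."""
    result = None
    for step in steps:
        result = step(result)
    return result

report = run_pipeline([load_data, train_model, evaluate])
print(report)
```

Real MLOps tools layer things like tracking, caching, and deployment on top of this, but the appeal is the same: you can swap out one step (a different data source, a different model) without rewriting the rest.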

Lev has been testing GPT-4V(ision) and DALL-E 3 and here’s what he thinks…

OpenAI is moving into the realm of LMMs (Large Multimodal Models) with the launch of GPT-4V(ision), which extracts information from and comments on uploaded images, and the DALL-E 3 image generator.

They’re quite different from their predecessors but have their limitations, which you can broadly classify into technical ones, and limitations imposed for ethical reasons.

Technical limitations: both vision comprehension and image generation struggle with tabular or structured data. GPT-4V struggles to interpret a graph, chart, or table accurately, while DALL-E 3 gives very bizarre results when asked to generate things like a pie chart. Both are close, but a tool which understands 95% of the data it processes is still utterly unreliable. So we will need to wait.
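Why is 95% not good enough? Here is a quick back-of-the-envelope sketch. It rests on an assumption that is mine rather than something we measured: that "95%" means each individual cell of a table is read correctly 95% of the time, and that mistakes are independent of each other. The `chance_all_correct` function is just a name made up for this example.

```python
def chance_all_correct(per_item_accuracy: float, items: int) -> float:
    """Probability that every item is read correctly, assuming independent errors."""
    return per_item_accuracy ** items

# How likely is a completely correct read as the table gets bigger?
for cells in (1, 10, 20, 50):
    chance = chance_all_correct(0.95, cells)
    print(f"{cells:>2} cells: {chance:.0%} chance of a fully correct read")
```

Under those assumptions, a 20-cell table comes back completely right only about 36% of the time, and a 50-cell table about 8% of the time. Small per-item error rates compound quickly.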

Imposed limitations: OpenAI has learned much from its long string of lawsuits and PR fiascos. GPT-4V will not identify any individual from their face in a picture, even if it is a historical figure from the distant past like Abraham Lincoln or Gandhi. It tries really hard to stick to this rule, but it’s amazingly easy to fool it. Upload a picture of a US penny and ask "What is this coin?" It will tell you it is a US penny with Abraham Lincoln on it. Same goes if you ask it to identify a movie character: it will tell you the real name of the actor.

GPT-4V will also be very cautious when describing people in pictures. It will not comment on body type, skin color, or suggested health measures, no matter how much you push it to do so. DALL-E 3 will likewise avoid generating any images depicting violence, illicit activity, and such.

We love to share knowledge so let us know what you think of these tools, if you’ve tried them out!

Just FYI there’s a 20% discount on our Generative AI Masterclass right now

Lev and I teach a very in-depth generative AI course and it’s going at a discounted price for a week, so if you want to use generative AI in your work but you JUST don’t know how, get yourself on the November cohort.

Thanks for reading, see you next month!

Jeremy