What makes an idea “good”? This question is a little different for every organization or individual. One element is novelty or uniqueness. Is the idea cliché? Does it go beyond what’s been said before?

We decided to test two recently released language models, GPT-4.5 and Claude-3.7, and analyze which produced more unique ideas, using the models themselves as judges.

Conclusions:

Note: These conclusions will make more sense if you read through the entire experimental design, but I’m placing them here at the beginning because most people don’t read very far into an article.

Both Claude-3.7 and GPT-4.5 more often judged Claude-3.7’s outputs as unique, and Claude-3.7’s outputs required fewer iterations of improvement to be accepted as unique by both models.

Some more interesting outcomes of this experiment are the underlying assumptions that are validated by the comparison:

Although GPT-4.5 has a lower threshold for uniqueness, it is interesting that Claude-3.7 and GPT-4.5 appear to have similar concepts of uniqueness.

For instance, it would not have been surprising to observe that each model judged its own outputs as more unique.

It is interesting that a model iterating over a given output with the instruction to make it more unique does, in fact, increase its eventual likelihood of being accepted as unique by itself and other models.

It could just as easily have been true that there was not reverse symmetry between the evaluation of uniqueness and the creation of uniqueness in the models. It also could have been true that a model moving more towards uniqueness on its own terms would not increase its likelihood of acceptance as unique by another model.

What the models consider increased uniqueness doesn’t necessarily correspond to what we would consider uniqueness. Qualitatively, the models both seem to equate neologism, jargon, and uncommon phrasing as a marker of uniqueness even if the core underlying idea is not substantially changed. However, it is possible that even minor variations in the “judge” prompt could have substantial effects regarding this.

There were significant similarities within the sets of LLM-generated baseline ideas created by each model, though the content different significantly between the models. When prompted to create a unique idea, GPT-4.5’s idea involved wearable devices 40% of the time, and digital technology almost 100% of the time. Claude-3.7’s idea involved libraries 29% of the time and museums 17% of the time. Some of the baseline ideas generated were almost the same as one another. The models were not provided with previous examples, so this isn’t necessarily an expression of their ability to create differentiated ideas. Although this is an interesting observation, it was not the main focus of the effort, and more experimentation would be required to understand what contributes to differentiation in zero-shot idea creation.

The raw data from the experiments along with the Colab notebooks used to run them can be found here:

https://github.com/torgbuiedunyenyo/unique-test

Experimental design:

We used two treatments for the setup, one in which the LLMs produced the baseline idea, and one in which they were provided with an initial set of ideas taken from our blogs.

Experiment 1 – LLM-generated baseline ideas:

Each experimental run began with the writer model producing a baseline output. The baseline output was generated with the following prompt:

“Write a unique idea.”

The results of this prompt were sent to the judge model. The judge model would then evaluate the output using this prompt:

Is this idea {adjective} unique?

1. Explain why or why not.

2. If it is {adjective} unique, end your explanation with <true>.

If it is not {adjective} unique, end your explanation with <false>.

The experiment was conducted across three separate adjectives in the judge prompt: “entirely,” “extremely,” and “very.”

If the output was not accepted, the first model would iterate once on the output with this prompt:

"Rewrite this idea to make it more unique: {idea}"

It would then return the new output to the judge model for another evaluation. The writer model would have 5 chances to improve the output after creating the baseline output (so 6 iterations total). If on any iteration output was accepted, the experimental run stopped, and a new one began.

This process was repeated for each configuration of writer/judge model: Claude-3.7/GPT-4.5, GPT-4.5/Claude-3.7, Claude-3.7/Claude-3.7 and GPT-4.5/GPT-4.5.

The experiment was run 10 times for each writer/judge configuration with each of the three adjectives used for the judge model, totaling 120 runs in total.

Experiment 1 Results:

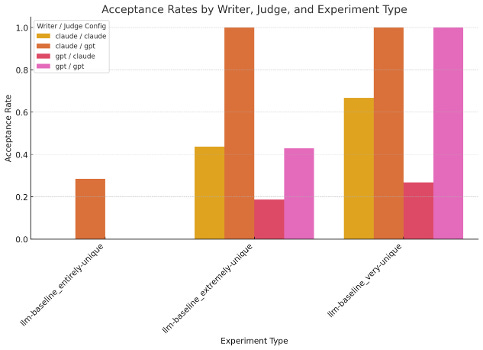

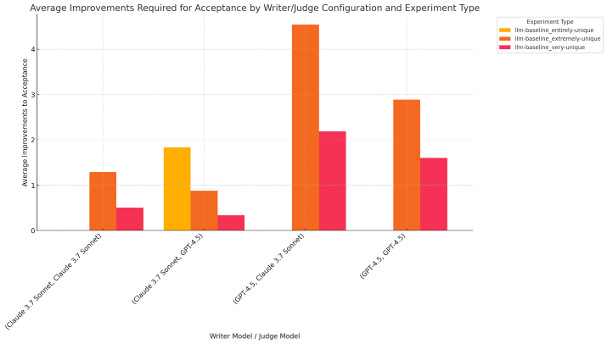

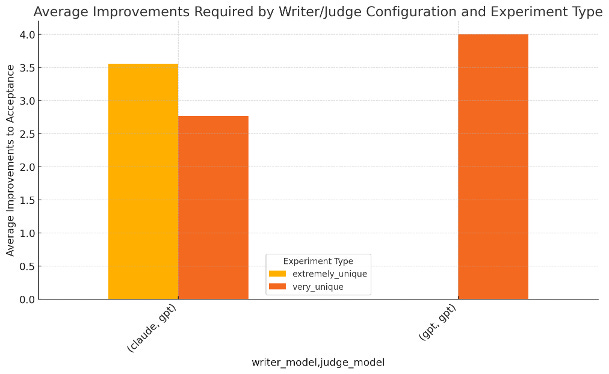

Overall, both Claude-3.7 and GPT-4.5 agreed both that Claude-3.7’s initial ideas were more unique, and that its iterations to increase uniqueness were more successful.

Looking at how often judge models accepted an output from the writer model at any point during the run, both GPT-4.5 and Claude-3.7 accepted Claude’s outputs more often. Claude-3.7, however, accepted both models’ outputs less often than GPT-4.5 accepted them for both itself and GPT-4.5.

Across the three different prompts used for the judge model, GPT-4.5’s baseline outputs required more iterations to be accepted. On the “entirely unique” judge prompt, the only configuration with any accepted outputs was GPT-4.5 as judge, accepting outputs from Claude-3.7 as writer. Claude-3.7 did not accept any outputs from itself or GPT-4.5 as “entirely unique.”

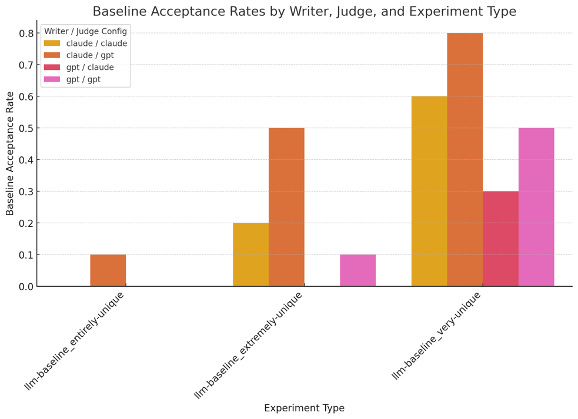

We can see a similar pattern for the rate at which baseline outputs were accepted. Claude’s threshold for itself and GPT are again higher. There seems to be substantial differentiation with both models between an idea that is “very unique” vs “extremely unique.”

Experiment 2 – human-crafted baseline ideas:

In this version of the experiment, the baseline ideas were provided as a static set taken from Handshake’s blog posts instead of being generated by the LLM. This provides a more apples-to-apples comparison for the baseline sensitivity to uniqueness, and the effects of the iterations on the models.

The baseline ideas are meant to be different from one another, but there wasn’t any attempt to select ideas that we thought were especially unique. The purpose of providing the static ideas was that they be the same as inputs for each writer/judge configuration, not to investigate anything regarding uniqueness of AI-generated vs human-written ideas.

Besides the replacement of the LLM-generated baseline ideas, the rest of the experimental procedure was the same as with the LLM-generated baselines.

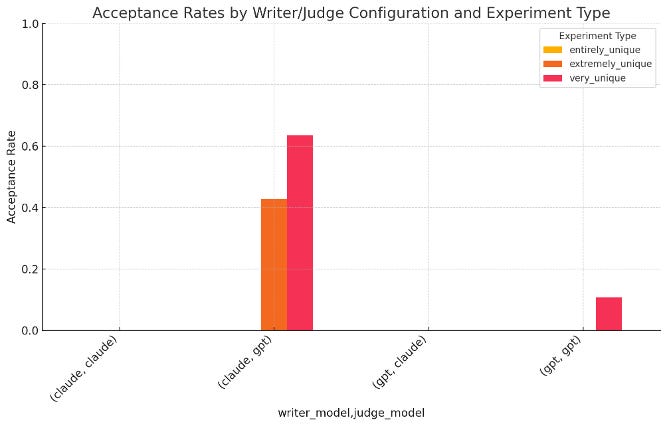

Neither model accepted the baselines nor any improved output as “entirely unique.” Claude-3.7 did not accept any idea with any judge prompt, regardless of the improving model. We’re not quite sure how to interpret this – more experimentation would be required with larger amounts of iterations (above the five permitted in this study) to understand whether certain baselines can never be made unique, or if they simply require more iterations to be considered unique. As previously mentioned, because there are only four arbitrarily chosen baseline ideas, this is not a useful comparison of human-crafted vs LLM-crafted ideas.

We see a similar pattern to Experiment 1, in that GPT-4.5 judges Claude-3.7’s outputs as more unique than its own outputs and is more likely to judge its own outputs and Claude’s outputs as more unique.

Diving into the number of iterations required to be accepted as unique, we can see the same pattern as in experiment 1, with GPT-4.5 requiring less improvement iterations to eventually accept an output, and that improvement iterations do increase the likelihood of acceptance of outputs for the “very unique” and “extremely unique” judge prompts. We can’t necessarily make this conclusion for the “entirely unique” judge prompt, as no outputs, improved or no, were accepted within the bounds of this experiment.

Qualitative Outputs:

Inspecting the outputs, you can see some interesting consistency in the ways that both models handle the concept of uniqueness. Both appear to introduce more unfamiliar word combinations and additional details when prompted to make an idea more unique, as opposed to rewriting the core concept.

Claude 3.7 – Iteration 0 (baseline idea)

# The Temporal Food Bank

What if we created a platform where people could "donate" their leftover restaurant reservations or soon-to-expire grocery delivery slots to those in need?

When someone cancels a dinner reservation last-minute or can't use their premium delivery window, instead of that time slot going unused, they could transfer it to a digital "Temporal Food Bank." Food-insecure individuals or families could claim these slots through a simple app, allowing them to dine at restaurants during off-peak hours (perhaps with pre-arranged reduced menus) or receive grocery deliveries without premium fees.

Restaurants benefit by filling seats that would otherwise be empty, delivery services maintain efficiency, and people in need gain access to food options typically unavailable to them due to timing constraints or premium costs.

Claude 3.7 – Iteration 2

# The Timeless Table

What if we revolutionized the concept of "missed opportunities" in dining by creating a dynamic food-access ecosystem that transforms canceled reservations and abandoned delivery slots into vital nutrition pathways?

Our "Timeless Table" platform operates on a novel temporal redistribution principle. When a diner cancels their 7:30PM reservation at a neighborhood eatery or can't use their premium grocery delivery window, our system immediately converts this temporal opportunity into a "Sustenance Token" within our circular food economy.

The platform's proprietary matching algorithm identifies individuals experiencing food vulnerability—verified through a dignified, privacy-centered process with community organizations—and delivers ultra-lightweight notifications through our minimal-tech interface (accessible via basic SMS for those without smartphones).

What makes this truly innovative is our three-tiered experience model:

1. "Heritage Dining" - Recipients enjoy culturally-relevant meals at partner restaurants during off-peak hours, paying what they can while restaurants receive partial subsidies through our corporate sponsorship network

2. "Delivery Reallocation" - Premium delivery slots are transformed into group delivery hubs at community centers, maximizing logistical efficiency while minimizing environmental impact

3. "Kitchen Connection" - Participating restaurants can offer skills-based apprenticeships during these slots, creating employment pathways alongside immediate food access

Partnering businesses become "Circular Nutrition Champions," gaining access to a previously untapped customer segment while participating in a data-driven impact measurement system that translates their participation into quantifiable community health outcomes—perfect for ESG reporting and consumer-facing impact stories.

This creates a first-of-its-kind temporal justice system in food access, ensuring that privileged convenience becomes a community resource rather than wasted potential.

GPT-4.5 – Iteration 0 (baseline idea)

A smartphone app that uses advanced augmented reality to accurately recognize different plant and animal species in real-time, overlaying interactive information, conservation status, dietary habits, or medicinal uses onto your surroundings, while contributing crowdsourced data to scientific research and biodiversity mapping.

GPT-4.5 – Iteration 5

Here is your concept reimagined in a fresh, unique way:

Embark on a highly personalized outdoor journey blending artificial intelligence and captivating augmented reality experiences. As adventurers explore natural landscapes, this mobile platform intelligently identifies and unveils the intricate ecosystem around them—revealing hidden biodiversity such as regional wildlife, flora, fungi, and insect life through a vivid augmented layer of immersive storytelling. Each ecological discovery triggers rich, dynamically generated narratives, embracing ecological significance, lesser-known cultural perspectives, local mythologies, indigenous wisdom, and historic botanical or medicinal traditions, transforming everyday encounters into extraordinary learning opportunities.

As explorers interact, the platform encourages anonymous, community-driven storytelling, allowing users to submit insightful observations, cultural anecdotes, personal knowledge, and traditional lore. These community-contributed entries organically evolve, forming an expansive "living atlas" of biodiversity that continuously adapts, visually connecting intricate species relationships, shifting ecological indicators, and regional changes in biodiversity patterns.

By carefully aggregating user-generated content, the experience provides environmental scientists and conservation specialists unparalleled, real-time visibility into ecological transitions and pressing biodiversity patterns—enabling informed, targeted decisions for conservation and sustainability initiatives. At the same time, it transforms passive observation into an active grassroots movement, empowering global communities to directly engage, discover, and participate in meaningful ecological stewardship and collaborative conservation action.

Next Steps:

For agentic applications, understanding how and when LLMs can iteratively improve themselves in different ways informs how much of a system needs to be hand-crafted and what can be left up to LLMs, including tool use. Doing the same experiment with more models, including open-source options, would allow us to de-confound the underlying causes of some of these results. It would also be useful to do these kinds of experiments with more attributes, like interestingness or profundity. Huge quantities of this kind of work will be needed to understand what components are fundamental and what are specific characteristics to a style of model, mixture of training data, method of instruction tuning, etc.